SpoolScout

2024

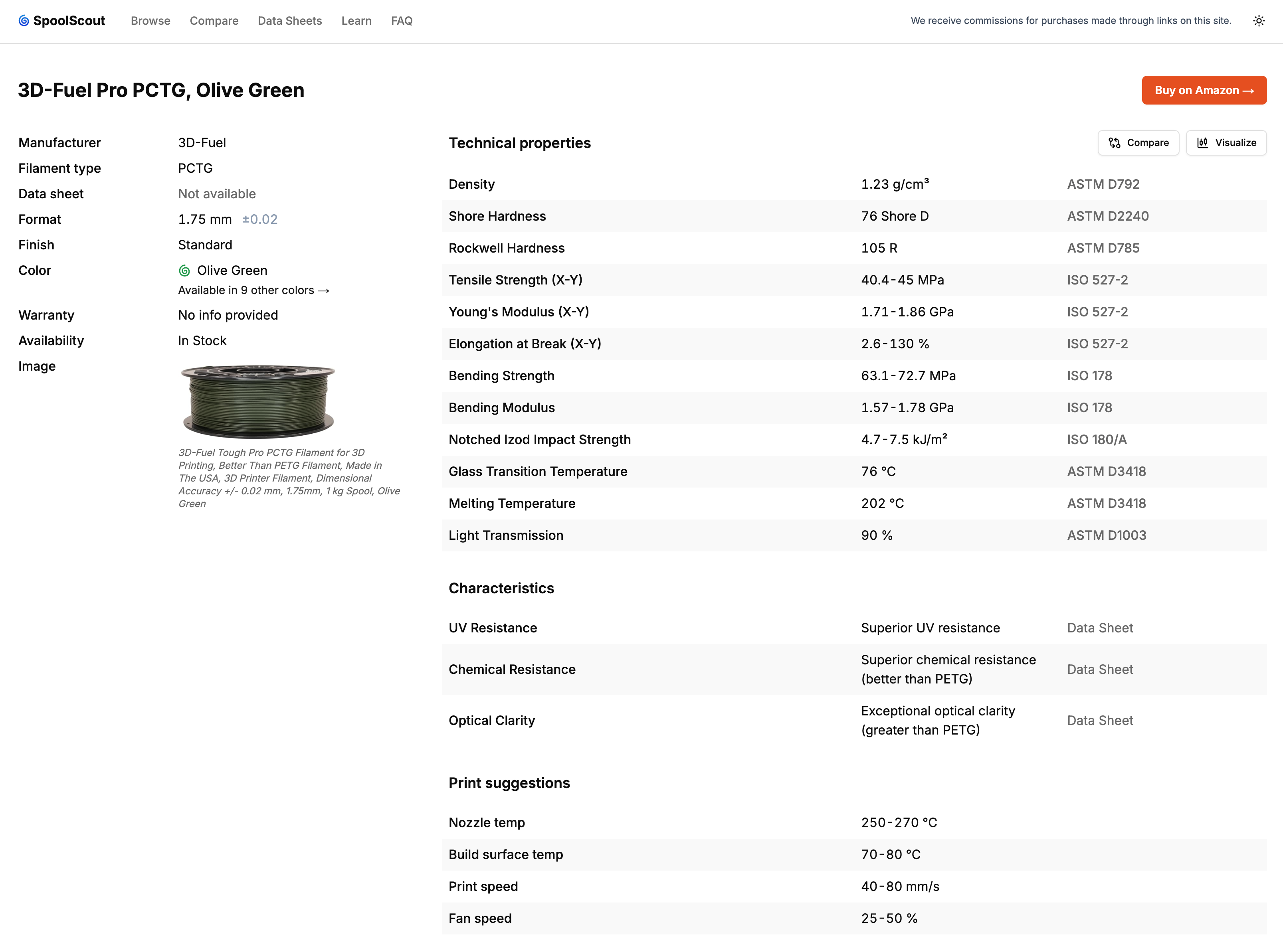

SpoolScout is a successful little project that chugs along and keeps users happy. It’s a database of 3D printing filaments that lets you find and compare them by their physical, mechanical, and chemical properties.

I spotted a problem: manufacturers don’t make this data easy to find, yet for many projects it’s critical. There are also different standards around the world, so it was important to make it clear when we’re not comparing apples to apples.
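One way to make that explicit in the data model is to store the unit and test standard alongside every value, and refuse to compute a difference when they don’t match. A minimal sketch; the Measurement shape and the example standard names (ISO 527 and ASTM D638 are both tensile test methods) are assumptions for illustration, not SpoolScout’s actual schema:

```typescript
// Hypothetical data model: each measured value records its unit and the test
// standard it was measured under (e.g. "ISO 527" vs "ASTM D638" for tensile tests).
type Measurement = {
  value: number;
  unit: string;
  standard: string;
};

type ComparisonResult =
  | { comparable: true; difference: number }
  | { comparable: false; reason: string };

// Only produce a numeric difference when unit AND standard match; otherwise
// return a reason the UI can show next to both values.
function compareMeasurements(a: Measurement, b: Measurement): ComparisonResult {
  if (a.unit !== b.unit) {
    return { comparable: false, reason: `different units (${a.unit} vs ${b.unit})` };
  }
  if (a.standard !== b.standard) {
    return { comparable: false, reason: `different test standards (${a.standard} vs ${b.standard})` };
  }
  return { comparable: true, difference: a.value - b.value };
}
```

Returning a reason instead of a number lets the UI still show both values side by side while making it obvious they shouldn’t be ranked against each other.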

This project is my playground for all kinds of interesting new AI things. So you might see some things below that are obscenely over-engineered or silly. That’s the point!

Cloudflare-first

I’m a Cloudflare fanboy so I wanted to get this all working there.

- Site is hosted on Pages using next-on-pages (I’d like to migrate to Astro, on a Worker)

- Database runs on Cloudflare D1

- PDFs for the data sheets, safety sheets, etc. are stored in and served from R2 object storage

- AI memory and caching run on KV

- A Cron Worker fetches new prices from Amazon via PAAPI5 or the Creator API
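The price refresh can be sketched as a Worker with a scheduled handler that reads the tracked ASINs from D1 and writes new prices back in one batch. This is a sketch under assumptions: the products table, its columns, and the fetcher that wraps PAAPI5 or the Creator API are all hypothetical, and the D1 types are trimmed down to the few methods used here.

```typescript
// Minimal stand-ins for the parts of the Workers/D1 types used below;
// in a real project these come from @cloudflare/workers-types.
interface PreparedStatement {
  bind(...values: unknown[]): PreparedStatement;
  all<T = Record<string, unknown>>(): Promise<{ results: T[] }>;
}
interface D1Like {
  prepare(query: string): PreparedStatement;
  batch(statements: PreparedStatement[]): Promise<unknown[]>;
}
interface Env {
  DB: D1Like;
}

// Hypothetical quote shape returned by a PA-API 5 / Creator API wrapper.
type PriceQuote = { asin: string; priceCents: number | null; currency: string };
type PriceFetcher = (asins: string[]) => Promise<PriceQuote[]>;

// Hypothetical table and column names.
const UPDATE_SQL =
  "UPDATE products SET price_cents = ?1, currency = ?2, price_updated_at = ?3 WHERE asin = ?4";

// Pure step: turn quotes into bind parameters, skipping items with no current price.
function buildPriceUpdates(quotes: PriceQuote[], nowIso: string): unknown[][] {
  return quotes
    .filter((q) => q.priceCents !== null)
    .map((q) => [q.priceCents, q.currency, nowIso, q.asin]);
}

async function refreshPrices(env: Env, fetchPrices: PriceFetcher): Promise<number> {
  const { results } = await env.DB.prepare("SELECT asin FROM products").all<{ asin: string }>();
  const quotes = await fetchPrices(results.map((r) => r.asin));
  const rows = buildPriceUpdates(quotes, new Date().toISOString());
  if (rows.length === 0) return 0;
  // D1 runs a batch as a single transaction, so a failure rolls the whole batch back.
  await env.DB.batch(rows.map((params) => env.DB.prepare(UPDATE_SQL).bind(...params)));
  return rows.length;
}

// Placeholder: the actual Amazon API call is not described in the post.
const fetchFromAmazon: PriceFetcher = async () => {
  throw new Error("not implemented: call PA-API 5 or the Creator API here");
};

export default {
  // Invoked by the Cron Trigger configured for this Worker.
  async scheduled(_controller: unknown, env: Env, ctx: { waitUntil(p: Promise<unknown>): void }) {
    ctx.waitUntil(refreshPrices(env, fetchFromAmazon));
  },
};
```

Keeping the row-building step pure makes it testable without D1. The schedule itself would live in the Worker’s Wrangler config as a crons entry under triggers, and a real fetcher would also need to respect the Amazon API’s request limits.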

Adding products… with AI

New products show up on Amazon all the time. It’s important that we don’t just flood the site with junk, so there’s a process to enrich each product we identify with the manufacturer’s data and link it to a specific SKU.

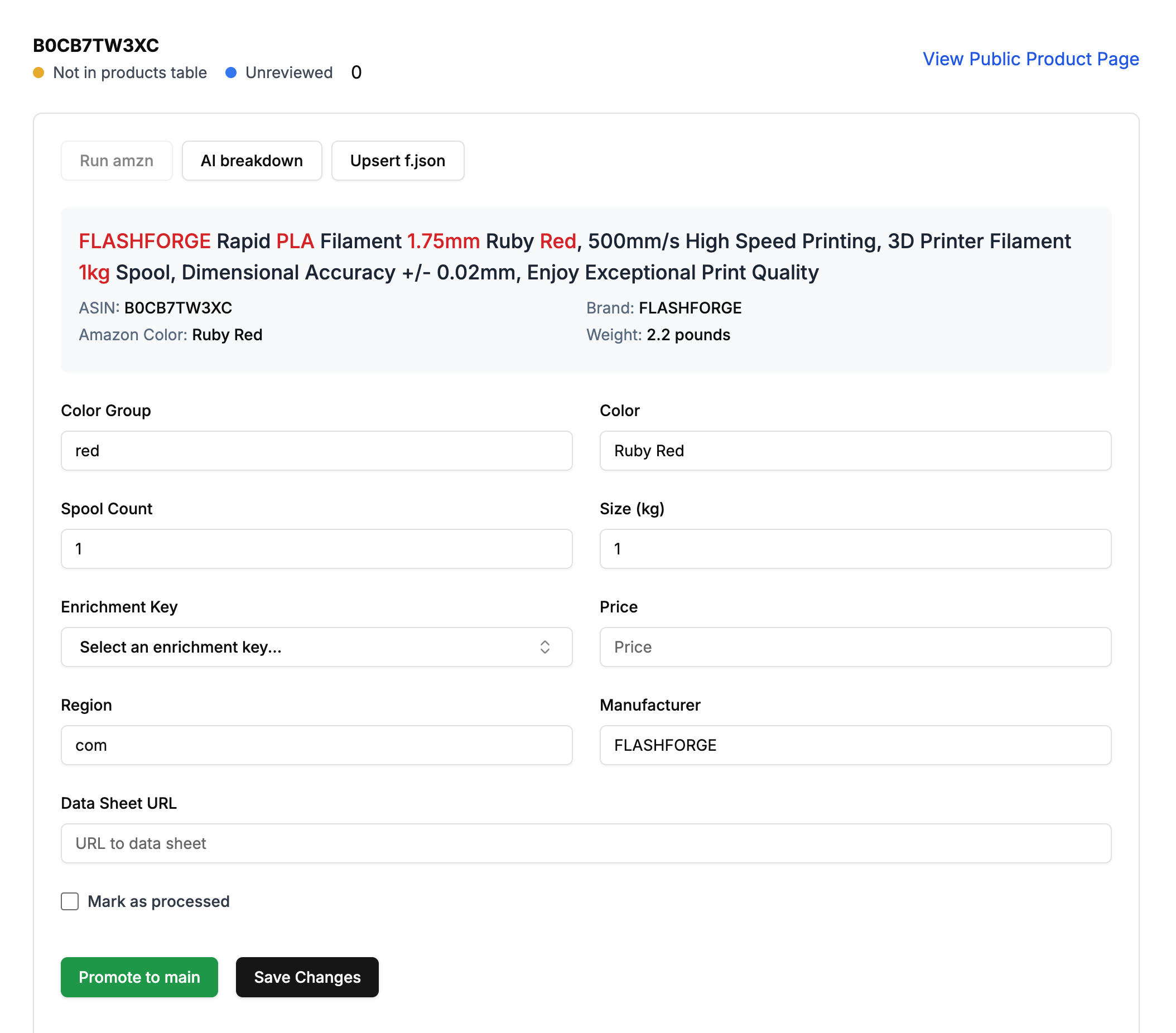

To begin with, I processed new products by running a local script, adding the results to an Excel sheet, filling in the missing data, and uploading a CSV of new products. Eventually I started building an admin interface around this so I could work towards a fully automated flow. New products are surfaced by recurring automated searches and land in a list I called Hot Products. Each one needs to be matched to an enrichment key and have some metadata added: colors, price, spool count, etc.

That workflow looks a bit like this. A responsive UI means I can get through it quickly on my phone on the train in the morning.
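Matching a Hot Product to an enrichment key lends itself to a cheap first-pass suggestion before any human or AI review. A minimal sketch using token overlap between the listing title and each key’s manufacturer and product line; the data shapes, key format, and scoring rule are assumptions for illustration, not necessarily how SpoolScout does it:

```typescript
// Hypothetical shapes: a Hot Product is a raw Amazon listing; an enrichment key
// points at manufacturer data for one product line.
type HotProduct = { asin: string; title: string };
type EnrichmentKey = { key: string; manufacturer: string; productLine: string };

// Lowercase and split on anything that isn't a letter, digit, or "+" (so "PLA+" survives).
function tokens(text: string): string[] {
  return text.toLowerCase().split(/[^a-z0-9+]+/).filter((t) => t.length > 0);
}

// Score each key by the fraction of its tokens found in the title and only
// suggest a key when every token is present.
function suggestEnrichmentKey(product: HotProduct, keys: EnrichmentKey[]): EnrichmentKey | null {
  const titleTokens = new Set(tokens(product.title));
  let best: EnrichmentKey | null = null;
  let bestScore = 0;
  let bestLength = 0;
  for (const k of keys) {
    const wanted = tokens(`${k.manufacturer} ${k.productLine}`);
    if (wanted.length === 0) continue;
    const hits = wanted.filter((t) => titleTokens.has(t)).length;
    const score = hits / wanted.length;
    // Prefer higher scores; on a tie, prefer the more specific (longer) key.
    if (score > bestScore || (score === bestScore && wanted.length > bestLength)) {
      best = k;
      bestScore = score;
      bestLength = wanted.length;
    }
  }
  return bestScore === 1 ? best : null;
}
```

The tie-break matters for product lines that are prefixes of each other: a "Silk PLA" listing should land on the PLA Silk key rather than plain PLA.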

AI can obviously make this easier, so I created an AI Breakdown flow that took the product description and returned a completed form. It ran automatically as part of importing new products, so I usually just reviewed and approved the result.
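Whatever model fills in the form, its output is worth validating before it reaches the review screen. A minimal sketch, assuming the model is asked for JSON with the hypothetical fields below; the AI SDK’s structured-output helpers can do this kind of schema validation for you, but it’s written out by hand here to stay dependency-free:

```typescript
// Hypothetical fields the AI Breakdown fills in for a new product.
type ProductForm = {
  enrichmentKey: string | null;
  colors: string[];
  spoolCount: number;
  weightGrams: number | null;
};

// Illustrative prompt; the real wording isn't part of the post.
function breakdownPrompt(description: string): string {
  return [
    "Extract these fields from the filament listing below and reply with JSON only:",
    "enrichmentKey (string or null), colors (array of strings),",
    "spoolCount (integer >= 1), weightGrams (number or null).",
    "",
    description,
  ].join("\n");
}

// Parse and validate the model's reply; return null on anything malformed
// so the product falls back to manual entry instead of saving bad data.
function parseBreakdown(raw: string): ProductForm | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null || Array.isArray(data)) return null;
  const d = data as Record<string, unknown>;

  const key = d.enrichmentKey;
  const enrichmentKey = typeof key === "string" ? key : key === null ? null : undefined;
  if (enrichmentKey === undefined) return null;

  const colors = d.colors;
  if (!Array.isArray(colors) || !colors.every((c) => typeof c === "string")) return null;

  const spoolCount = d.spoolCount;
  if (typeof spoolCount !== "number" || !Number.isInteger(spoolCount) || spoolCount < 1) return null;

  const weight = d.weightGrams;
  const weightGrams = typeof weight === "number" ? weight : weight === null ? null : undefined;
  if (weightGrams === undefined) return null;

  return { enrichmentKey, colors: colors as string[], spoolCount, weightGrams };
}
```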

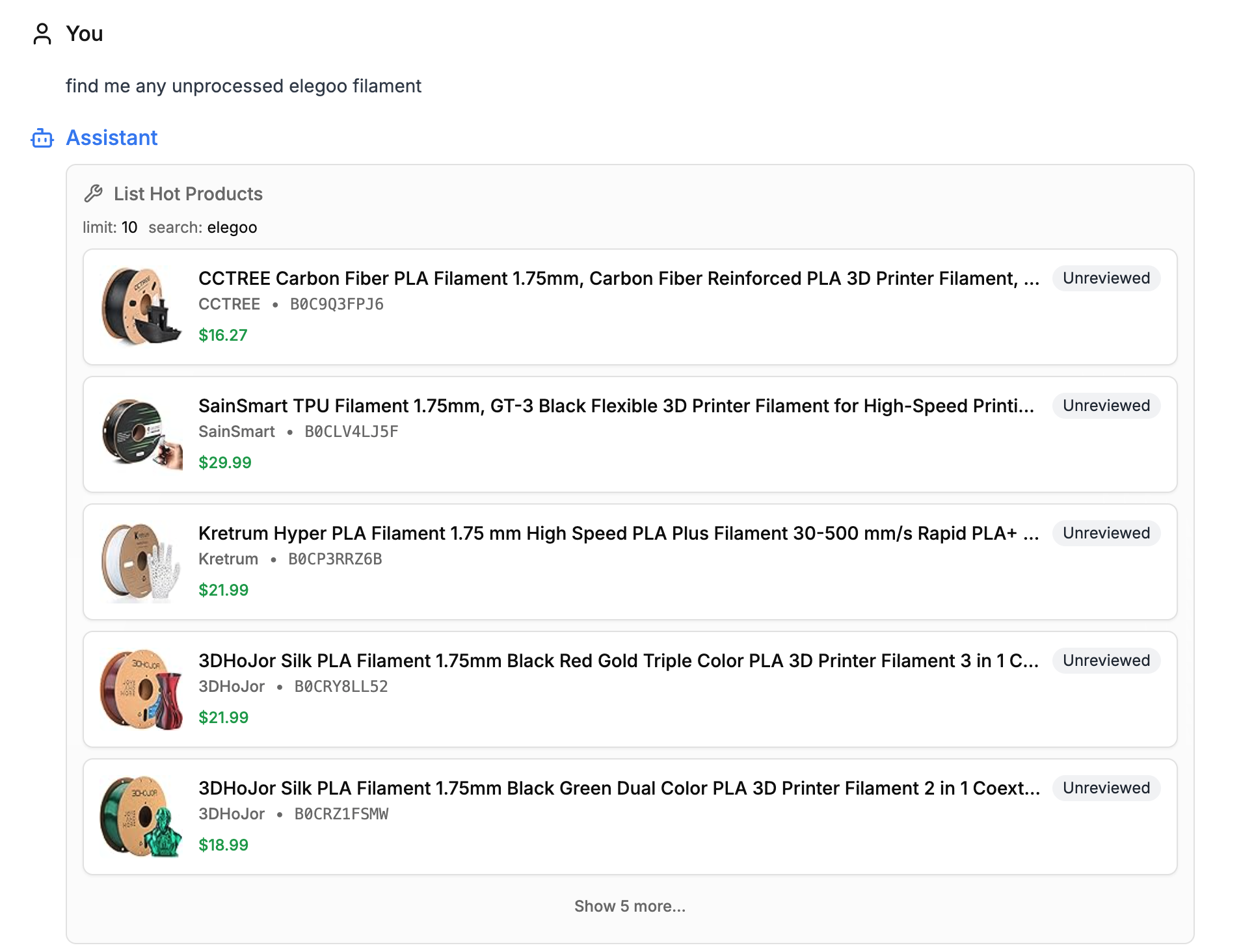

Then Vercel dropped ai-sdk and I knew I had to give it a go, so I built a chat interface that can process them in batches. It’s built with tool-calling on top of Gemini 3 Flash.

Here’s an example of the Agent finding filament that hasn’t been processed:

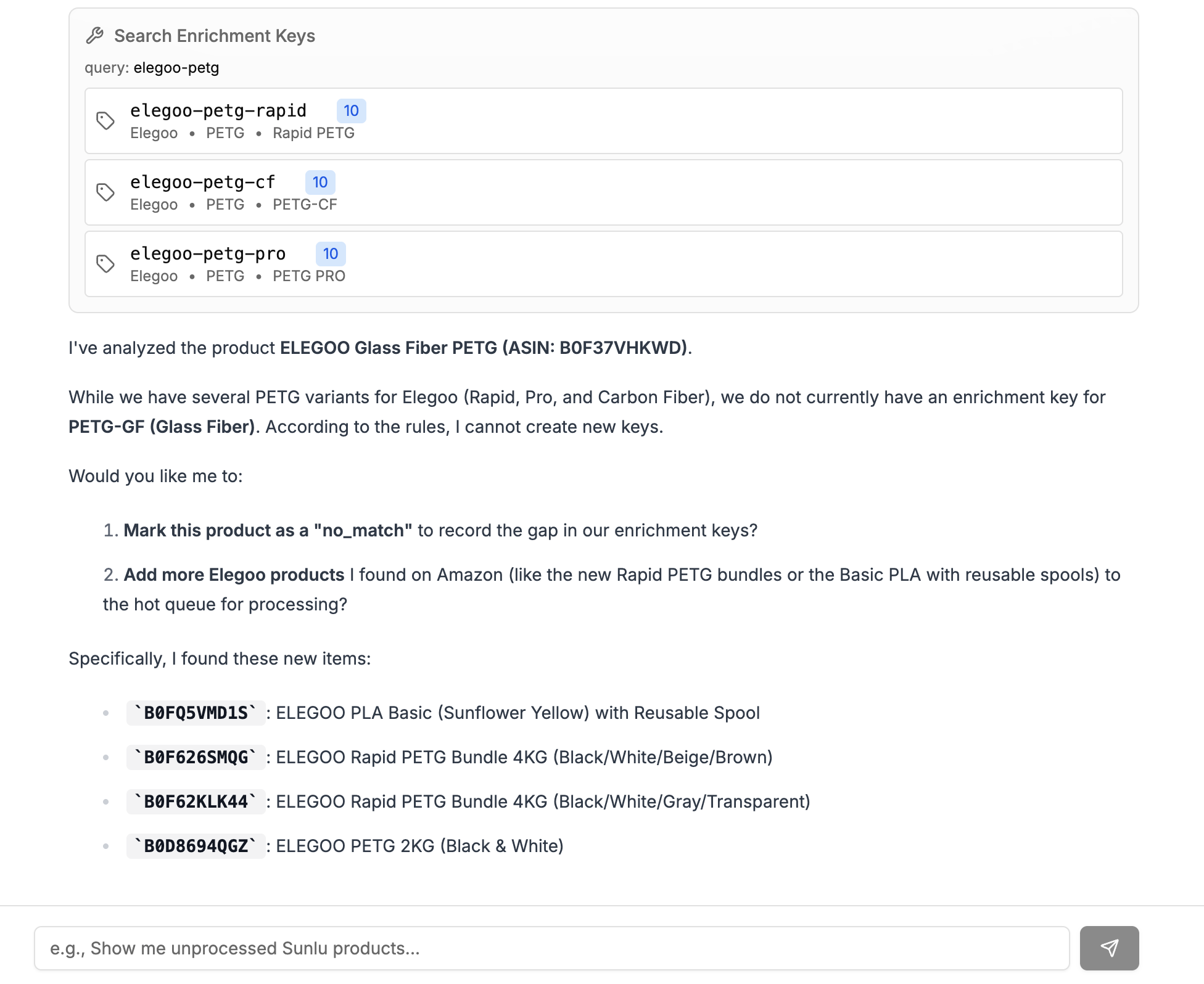

Here’s an example of the agent finding results but recognizing that we don’t have any enrichment data for them yet. It offers to mark them as no_match, which builds a list of filaments we know could be added but that need some legwork first.
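Under the hood, tool-calling means the model replies with a tool name and arguments, the app runs the matching function, and the result goes back to the model for its next turn. The AI SDK handles the schemas and that loop; this framework-agnostic sketch only shows the dispatch side, with two hypothetical tools mirroring the examples above and an in-memory array standing in for the database:

```typescript
type Status = "hot" | "processed" | "no_match";
type Filament = { asin: string; title: string; status: Status };

// In-memory stand-in for the products table (hypothetical data).
const filaments: Filament[] = [
  { asin: "A1", title: "Acme PETG Black", status: "hot" },
  { asin: "A2", title: "NewCo Silk PLA Gold", status: "hot" },
];

type ToolCall = { name: string; args: Record<string, unknown> };
type Tool = {
  description: string; // shown to the model so it knows when to call the tool
  run: (args: Record<string, unknown>) => unknown;
};

const tools: Record<string, Tool> = {
  findUnprocessed: {
    description: "List Hot Products that have not been matched or triaged yet.",
    run: () =>
      filaments.filter((f) => f.status === "hot").map((f) => ({ asin: f.asin, title: f.title })),
  },
  markNoMatch: {
    description: "Mark products with no enrichment data as no_match, given { asins: string[] }.",
    run: (args) => {
      const raw = args.asins;
      const asins: unknown[] = Array.isArray(raw) ? raw : [];
      let updated = 0;
      for (const f of filaments) {
        if (f.status === "hot" && asins.indexOf(f.asin) !== -1) {
          f.status = "no_match";
          updated++;
        }
      }
      return { updated };
    },
  },
};

// Execute one tool call requested by the model. Own-property lookup keeps names
// like "toString" from resolving to Object.prototype; errors are returned as data
// so the model can see what went wrong.
function dispatch(call: ToolCall): unknown {
  const tool = Object.prototype.hasOwnProperty.call(tools, call.name) ? tools[call.name] : undefined;
  if (!tool) return { error: `unknown tool: ${call.name}` };
  return tool.run(call.args);
}
```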

The agent even has a simple memory, which stores information for future system prompts in Cloudflare KV.
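A memory like that can be as simple as a list of notes under one KV key, appended to by the agent and folded into the system prompt at the start of each chat. A minimal sketch, assuming a hypothetical key name and note limit; the KVLike interface mirrors the get/put methods of a Workers KV binding:

```typescript
// The subset of a Workers KV namespace binding used here.
interface KVLike {
  get(key: string): Promise<string | null>;
  put(key: string, value: string): Promise<void>;
}

const MEMORY_KEY = "agent:memory"; // hypothetical key name
const MAX_NOTES = 50; // hypothetical cap to keep the prompt small

// Stored value is a JSON array of strings; anything else is treated as empty.
function parseNotes(raw: string | null): string[] {
  if (!raw) return [];
  try {
    const value = JSON.parse(raw);
    return Array.isArray(value) ? value.filter((x): x is string => typeof x === "string") : [];
  } catch {
    return [];
  }
}

function buildSystemPrompt(base: string, notes: string[]): string {
  if (notes.length === 0) return base;
  return `${base}\n\nThings you learned in previous sessions:\n${notes.map((n) => `- ${n}`).join("\n")}`;
}

// Append a note, keeping only the most recent MAX_NOTES.
async function remember(kv: KVLike, note: string): Promise<void> {
  const notes = parseNotes(await kv.get(MEMORY_KEY));
  notes.push(note);
  await kv.put(MEMORY_KEY, JSON.stringify(notes.slice(-MAX_NOTES)));
}

async function loadSystemPrompt(kv: KVLike, base: string): Promise<string> {
  return buildSystemPrompt(base, parseNotes(await kv.get(MEMORY_KEY)));
}
```

KV is eventually consistent and this read-modify-write has no locking, so two concurrent writes could drop a note. That’s an acceptable trade-off for a single-user admin agent, less so for anything multi-user.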

Programmatic SEO & agent optimization

I use a few techniques to make the site as likely as possible to be indexed and discovered by AI agents. So far so good: it gets a bunch of traffic from ChatGPT, Gemini, and Bing/Copilot.

- There’s JSON-LD metadata for products and pages.

- Every page has a programmatically generated Open Graph image, so each individual filament (and so on) gets its own custom image.

- Every filament in the tool gets a URL of the form /data-sheets/manufacturer name/specific product name, so an intelligent agent can easily traverse it and find other products from the same manufacturer without having to search.

- All the pages are server rendered with minimal JS on the frontend, so a headless agent can find its way around.

- The site gets a 99/100 Lighthouse score.
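The URL scheme and the JSON-LD can both be generated from the same product record. A minimal sketch, assuming a hypothetical FilamentPage shape and slug rules (the real site’s slugs may differ); the schema.org Product, Brand, and Offer types and the properties used are standard, and example.com stands in for the real origin:

```typescript
// Hypothetical page model for one filament data sheet.
type FilamentPage = {
  manufacturer: string;
  name: string;
  material: string;
  imageUrl: string;
  priceUsd: number | null;
};

// URL-safe slug: strip accents, lowercase, collapse everything else into single hyphens.
function slugify(text: string): string {
  return text
    .normalize("NFKD")
    .replace(/[\u0300-\u036f]/g, "")
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// One predictable path per filament: /data-sheets/<manufacturer>/<product>.
function dataSheetPath(manufacturer: string, name: string): string {
  return `/data-sheets/${slugify(manufacturer)}/${slugify(name)}`;
}

// schema.org Product markup; offers are omitted when there is no current price.
function productJsonLd(page: FilamentPage, origin: string): Record<string, unknown> {
  const ld: Record<string, unknown> = {
    "@context": "https://schema.org",
    "@type": "Product",
    name: page.name,
    brand: { "@type": "Brand", name: page.manufacturer },
    material: page.material,
    image: page.imageUrl,
    url: origin + dataSheetPath(page.manufacturer, page.name),
  };
  if (page.priceUsd !== null) {
    ld.offers = { "@type": "Offer", price: page.priceUsd.toFixed(2), priceCurrency: "USD" };
  }
  return ld;
}

// Escape "<" so a product name can never close the script tag early.
function jsonLdScript(ld: Record<string, unknown>): string {
  return `<script type="application/ld+json">${JSON.stringify(ld).replace(/</g, "\\u003c")}</script>`;
}
```

Because the path is derived only from manufacturer and product name, an agent that lands on one data sheet can strip the last segment and guess where the manufacturer’s other products live.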

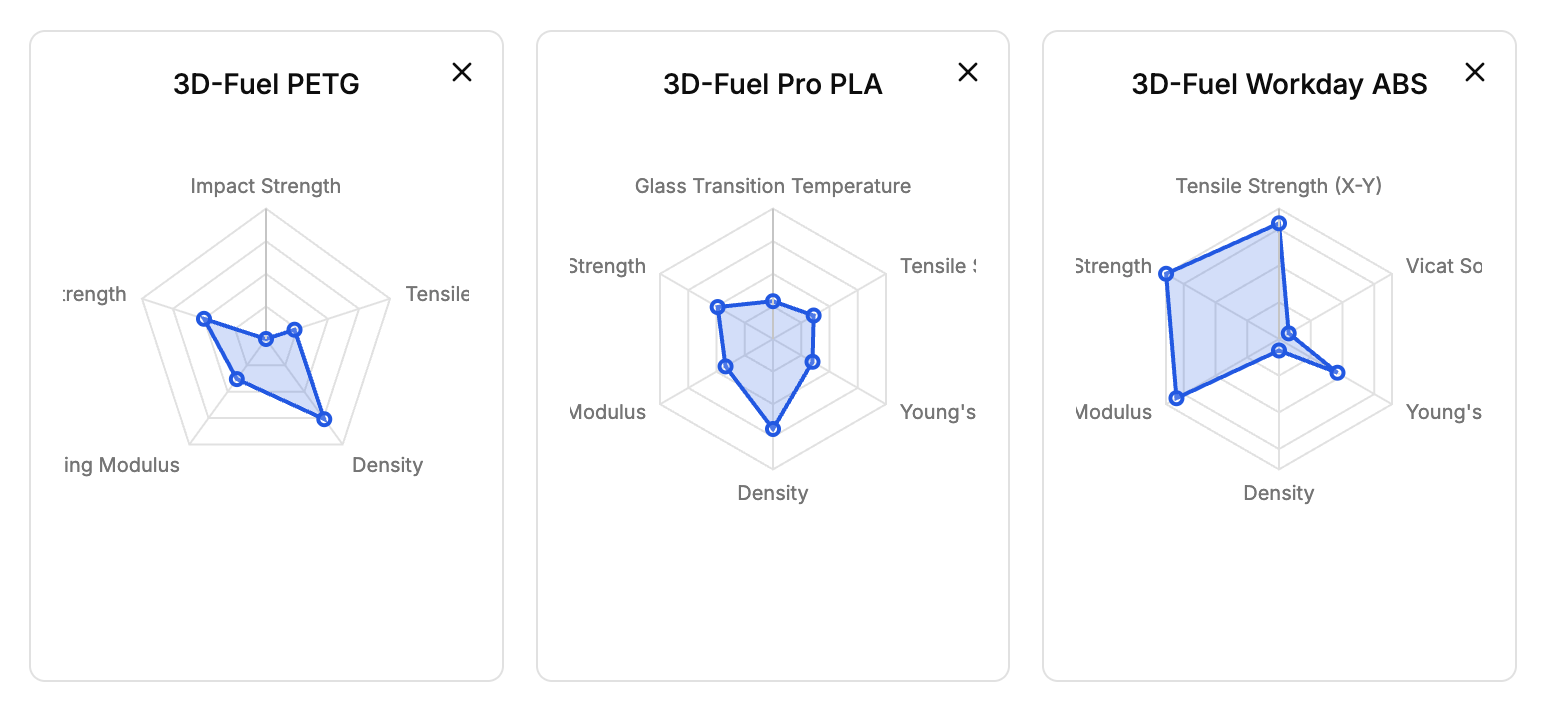

Comparisons

It’s important that links are shareable and land in exactly the same place, so SpoolScout can act as an authoritative reference. You can click here to load the exact same comparison from above. As a principle, I use nuqs to keep all of the state in the URL.
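The principle is simple to sketch without any library: serialize the comparison state into the query string and parse it back, falling back to defaults for anything missing or invalid. nuqs packages this pattern as React hooks (for example useQueryState with typed parsers), so treat this as an illustration of the idea rather than nuqs’s API; the state shape and parameter names are hypothetical:

```typescript
// Hypothetical comparison state; the real parameter names aren't in the post.
type CompareState = { ids: string[]; property: string; sort: "asc" | "desc" };

const DEFAULTS: CompareState = { ids: [], property: "tensile_strength", sort: "desc" };

// State -> query string. Defaults are omitted so shared links stay short.
function toSearch(state: CompareState): string {
  const params = new URLSearchParams();
  if (state.ids.length > 0) params.set("ids", state.ids.join(","));
  if (state.property !== DEFAULTS.property) params.set("property", state.property);
  if (state.sort !== DEFAULTS.sort) params.set("sort", state.sort);
  const qs = params.toString();
  return qs === "" ? "" : `?${qs}`;
}

// Query string -> state. Unknown or missing values fall back to defaults,
// so a hand-edited or stale link still renders something sensible.
function fromSearch(search: string): CompareState {
  const params = new URLSearchParams(search);
  const ids = (params.get("ids") ?? "").split(",").filter((id) => id.length > 0);
  const sort = params.get("sort");
  return {
    ids,
    property: params.get("property") ?? DEFAULTS.property,
    sort: sort === "asc" || sort === "desc" ? sort : DEFAULTS.sort,
  };
}
```

Since toSearch and fromSearch round-trip, the URL is the single source of truth: anyone opening a shared link rebuilds exactly the same comparison.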